Data Pipeline Attacks

An excerpt from Secure Intelligent Machines

Abstract

As cyber threat actors increasingly target AI systems, the data pipelines used to train these systems will similarly come under attack as a potentially easier or more covert route to poisoning and altering the operation of these systems. This excerpt highlights technical methods for implementing stealthful training data manipulation through targeted adjustments in pipeline automation.

ISBN: 979-8-9877897-0-4

Introduction

An attacker could leverage acquired access to incorporate a robust and complex malicious script into a model’s data transformation pipeline. This approach would undoubtedly give the threat actor the most capability and control, but attackers often shy away from such an overt approach. The primary reason is to evade detection. This is a common attacker strategy, dubbed living-off-the-land attacks, where the goal is to make as few changes as possible to the environment and use existing capabilities configured for malicious purposes rather than risk detection from new scripts and tools. Similarly, introducing subtle changes to the pipeline will be far less detectable than the inclusion of malware or advanced attack scripts. This section provides insights into how an attacker may implement low-profile data-layer attacks that incorporate these principles.

Instance Removal

The easiest way to make something disappear in the eyes of an AI system is to remove the training instances that define it. This primarily holds true for supervised learning but could be applied to production data streams that pass a data pipeline before processing. Adding an explicit instance drop for rows meeting certain conditions is the most straightforward way to implement this hack. A more subtle and potentially less detectable technique may involve degrading instances containing information to be hidden so that existing data scrubbing processes will execute the purge process. For example, many ML models fail to train or offer predictions if datasets contain instances with null values. Data pipelines often address this requirement by dropping instances that contain null values. Implementing a data filtering attack can be as simple as replacing a single, innocuous attribute of select data instances with a null value. Listing 4.5.1 shows an example of such an attack, with text highlighted in red as threat actor additions to an existing pipeline.

Inline Data Alteration

Threat actors intent on minimizing certain characteristics within datasets while shifting focus to others can simply add in-line data transformation logic. Statements like those used to alter the size above can be used to relabel datasets or significantly alter the numeric scale of a feature. Example outcomes from such alterations include inflating stock price predictions by increasing monetary values of specific trades, significantly degrading an autonomous vehicle’s braking ability by altering timing features of training data, and relabeling drone data instances to benign bird instances.

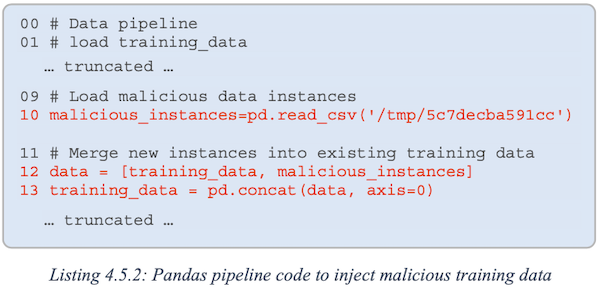

Data Instance Injection

Another form of in-line data alteration is automated data injection. In this attack, a malicious training dataset may be hidden in a pre- staging environment or similar system that operates a component of a data pipeline. The local pipeline configuration is altered to load and merge the malicious dataset into a training dataset before it is passed to the training environment. This allows ongoing poisoning of future training datasets and offers a modular approach where, after this configuration is in place, the attacker no longer requires modification to the pipeline or the AI data network to orchestrate changes in data poisoning outcomes. Instead, the attacker simply changes the instances in the maliciously injected file. Listing 4.5.2 provides an example implementation of automated data injection.

Theft by Pipeline

Pipeline file operations can also be used to skim pipeline data and stage it for exfiltration. Similar to the file read operation in the previous example, a file write operation may be included in the pipeline and configured to store the contents of a training data frame in a similarly obscure file location. Filtering logic can be added to enhance the file write operation to only store those containing sensitive information or intellectual property targeted by the attacker. Execution of commands to send stolen information off-network may also be included in the pipeline, but that action is typically set up as a separate scheduled task to minimize the chances of detection.

Pipeline Destruction

Finally, pipeline destruction requires far less subtlety and targeting. The effectiveness or impact, depending on perspective, of destruction attacks often depends on how readily recovery efforts can restore what was lost. System backup corruption and physical system damage are common destruction amplifier tactics but outside the scope of the AI cybersecurity program. A more AI-specific approach, however, would be the use of the AI data pipeline to orchestrate mass data destruction or ransomware-style encryption of datasets and data stores.